The Slow Website Problem

Aditi was excited about her new blog. She posted high-quality content, but visitors complained about slow loading times.

Her developer explained the issue: every time someone visited, the website fetched the same data from the database, and each of those queries took time.

The solution? Caching. By storing frequently accessed data closer to the user, the site could respond nearly instantly.

What is Caching?

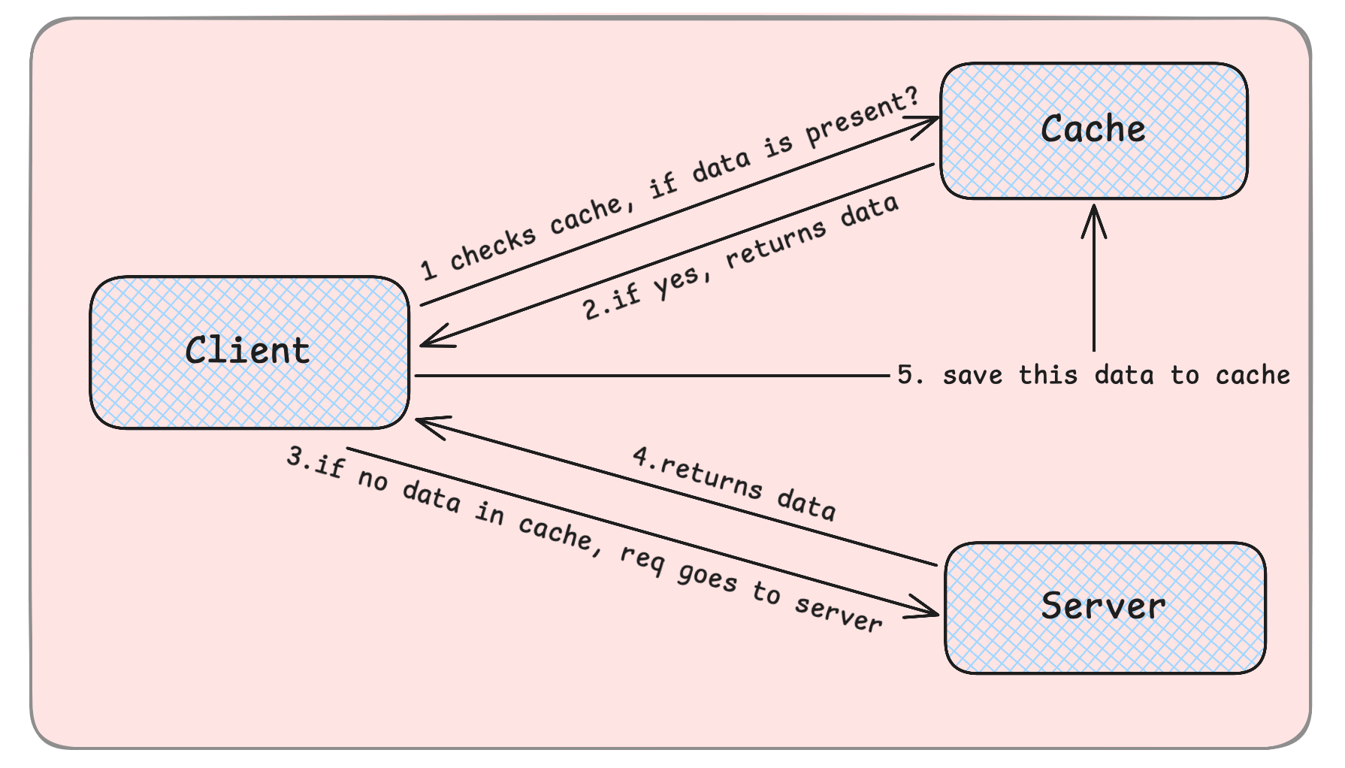

Caching is the process of storing copies of frequently accessed data in a fast-access location.

Instead of fetching data from a slow database or remote server, the system serves data from the cache, improving response times.

Why Cache?

Speed – Reduces response time significantly.

Reduced Load – Relieves database and server pressure.

Cost Efficiency – Minimizes expensive database queries.

Scalability – Handles high traffic efficiently.

Without caching, every request queries the database, slowing everything down.
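To put rough numbers on the speed benefit: if a fraction of requests (the hit rate) is served from the cache, the average response time is a weighted mix of cache latency and database latency. The 1 ms and 50 ms figures below are illustrative assumptions, not measurements.

```python
def average_latency_ms(hit_rate: float, cache_ms: float, db_ms: float) -> float:
    """Expected latency when a fraction `hit_rate` of requests hit the cache."""
    return hit_rate * cache_ms + (1 - hit_rate) * db_ms

# Assumed figures: 1 ms for a cache hit, 50 ms for a database query.
for hit_rate in (0.0, 0.5, 0.9, 0.99):
    print(f"hit rate {hit_rate:.0%}: {average_latency_ms(hit_rate, 1, 50):.1f} ms")
```

A hit rate of 0% is the "no caching" case (50 ms per request); under these assumptions, a 90% hit rate brings the average down to about 5.9 ms.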

Types of Caching

1. Client-Side Caching

Stored in the user's browser (e.g., images, CSS, JavaScript).

Example: A website logo loads instantly because it's cached locally.
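Browsers decide what they may keep locally largely from the `Cache-Control` HTTP response header the server sends. Below is a minimal sketch of a server-side policy function, assuming static assets (like the logo) get a long lifetime while HTML pages are revalidated on every visit. The extension list and the one-year lifetime are illustrative choices, not requirements.

```python
from pathlib import PurePosixPath

# Illustrative policy: which file types the browser may reuse, and for how long.
STATIC_EXTENSIONS = {".png", ".jpg", ".svg", ".css", ".js"}
ONE_YEAR_SECONDS = 365 * 24 * 60 * 60

def cache_control_for(path: str) -> str:
    """Return a Cache-Control header value for the requested file."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # Logos, stylesheets, scripts: the browser may reuse its local copy.
        return f"public, max-age={ONE_YEAR_SECONDS}"
    # HTML and everything else: the browser must check with the server before reuse.
    return "no-cache"

print(cache_control_for("/static/logo.png"))  # public, max-age=31536000
print(cache_control_for("/index.html"))       # no-cache
```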

2. Server-Side Caching

Stored on the backend to reduce database queries.

Example: Frequently accessed user profiles are cached in memory.
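For a single server process, Python's standard library already includes an in-memory cache: `functools.lru_cache`. The sketch below caches a hypothetical `load_profile` lookup whose body stands in for a real database query. `maxsize` caps how many profiles are kept, evicting the least recently used ones first.

```python
from dataclasses import dataclass
from functools import lru_cache

@dataclass(frozen=True)  # immutable, so callers can't corrupt the cached copy
class Profile:
    user_id: int
    name: str

queries = 0  # counts simulated database round-trips

@lru_cache(maxsize=1024)
def load_profile(user_id: int) -> Profile:
    """Hypothetical profile lookup; the body stands in for a database query."""
    global queries
    queries += 1
    return Profile(user_id, f"user{user_id}")

load_profile(7)  # miss: runs the "query"
load_profile(7)  # hit: returned from memory
print(queries)                    # 1
print(load_profile.cache_info())  # CacheInfo(hits=1, misses=1, maxsize=1024, currsize=1)
```

This cache lives inside one process, so several servers would each hold their own separate copy. Sharing one cache across servers is what distributed caching addresses.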

3. Distributed Caching

Data is cached across multiple servers for scalability.

Example: Netflix caches trending movies across data centres.
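With several cache servers, each client needs a way to decide which server holds a given key. One widely used technique is consistent hashing, which spreads keys across nodes and moves only the affected keys when a node is added or removed. Here is a minimal sketch; the node names are hypothetical, and production systems add replication, health checks, and tuning of the number of virtual nodes.

```python
import bisect
import hashlib

def _hash(value: str) -> int:
    # Stable hash (Python's built-in hash() is salted per process).
    return int.from_bytes(hashlib.md5(value.encode()).digest()[:8], "big")

class HashRing:
    def __init__(self, nodes: list[str], vnodes: int = 100):
        # Place each node at many points ("virtual nodes") around the ring
        # so keys spread evenly between nodes.
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def node_for(self, key: str) -> str:
        # Walk clockwise to the first node point at or after the key's hash,
        # wrapping around to the start of the ring if needed.
        idx = bisect.bisect_left(self._points, _hash(key)) % len(self._ring)
        return self._ring[idx][1]

ring = HashRing(["cache-a", "cache-b", "cache-c"])
print(ring.node_for("movie:trending:1"))
```

If `cache-c` is removed, only the keys it owned move to another node; keys on `cache-a` and `cache-b` stay where they are.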

Cache Invalidation – Keeping Data Fresh

Caching is great, but what happens when the underlying data changes? Cache invalidation removes or refreshes stale entries so users don't see outdated data.

1. Time-Based Expiry (TTL – Time to Live)

Cached data expires after a set time.

Example: Weather data refreshes every 30 minutes.
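A minimal sketch of time-based expiry: each entry remembers when it stops being valid, and reads treat expired entries as misses. The class name `TTLCache` and the city key are illustrative.

```python
import time

class TTLCache:
    """Minimal TTL cache: entries expire `ttl_seconds` after they are stored."""

    def __init__(self, ttl_seconds: float):
        self.ttl = ttl_seconds
        self._data: dict[str, tuple[float, object]] = {}

    def set(self, key: str, value: object) -> None:
        # Store the value together with its expiry time.
        self._data[key] = (time.monotonic() + self.ttl, value)

    def get(self, key: str):
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            # Expired: drop it so the caller fetches fresh data.
            del self._data[key]
            return None
        return value

weather = TTLCache(ttl_seconds=30 * 60)  # refresh weather every 30 minutes
weather.set("pune", {"temp_c": 31})
print(weather.get("pune"))  # {'temp_c': 31}
```

`time.monotonic()` is used rather than wall-clock time so that system clock adjustments can't make entries expire early or live too long.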

2. Write Invalidation

The cached entry is updated or removed whenever the corresponding data is modified in the database.

Example: A user updates their email, so the cache updates too.
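A minimal sketch of write invalidation, with plain dicts as hypothetical stand-ins for the database and the cache. The write goes to the database first, then the stale cache entry is deleted so the next read reloads the new value.

```python
database = {"user:1:email": "old@example.com"}
cache = {"user:1:email": "old@example.com"}  # currently holds a copy

def read(key):
    if key not in cache:
        cache[key] = database[key]  # cache miss: reload from the database
    return cache[key]

def update(key, value):
    database[key] = value  # 1. write the source of truth
    cache.pop(key, None)   # 2. invalidate the stale cached copy

update("user:1:email", "new@example.com")
print(read("user:1:email"))  # new@example.com
```

Deleting the entry instead of overwriting it is one common design choice: the cache never holds a value the database didn't accept, and the next read repopulates it.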

3. Manual Invalidation

Developers clear caches manually when needed.

Example: Clearing the product-listings cache after a big sale.
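Manual invalidation is easier when cache keys follow a naming scheme, because a whole group can be cleared at once. This sketch assumes a hypothetical `products:list:` key prefix and uses a plain dict as the cache.

```python
cache = {
    "products:list:page1": ["laptop", "phone"],
    "products:list:page2": ["tablet"],
    "user:42:profile": {"name": "Aditi"},
}

def invalidate_prefix(store: dict, prefix: str) -> int:
    """Delete every cached key starting with `prefix`; return how many were removed."""
    stale = [key for key in store if key.startswith(prefix)]
    for key in stale:
        del store[key]
    return len(stale)

removed = invalidate_prefix(cache, "products:list:")
print(removed, list(cache))  # 2 ['user:42:profile']
```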

Caching Strategies

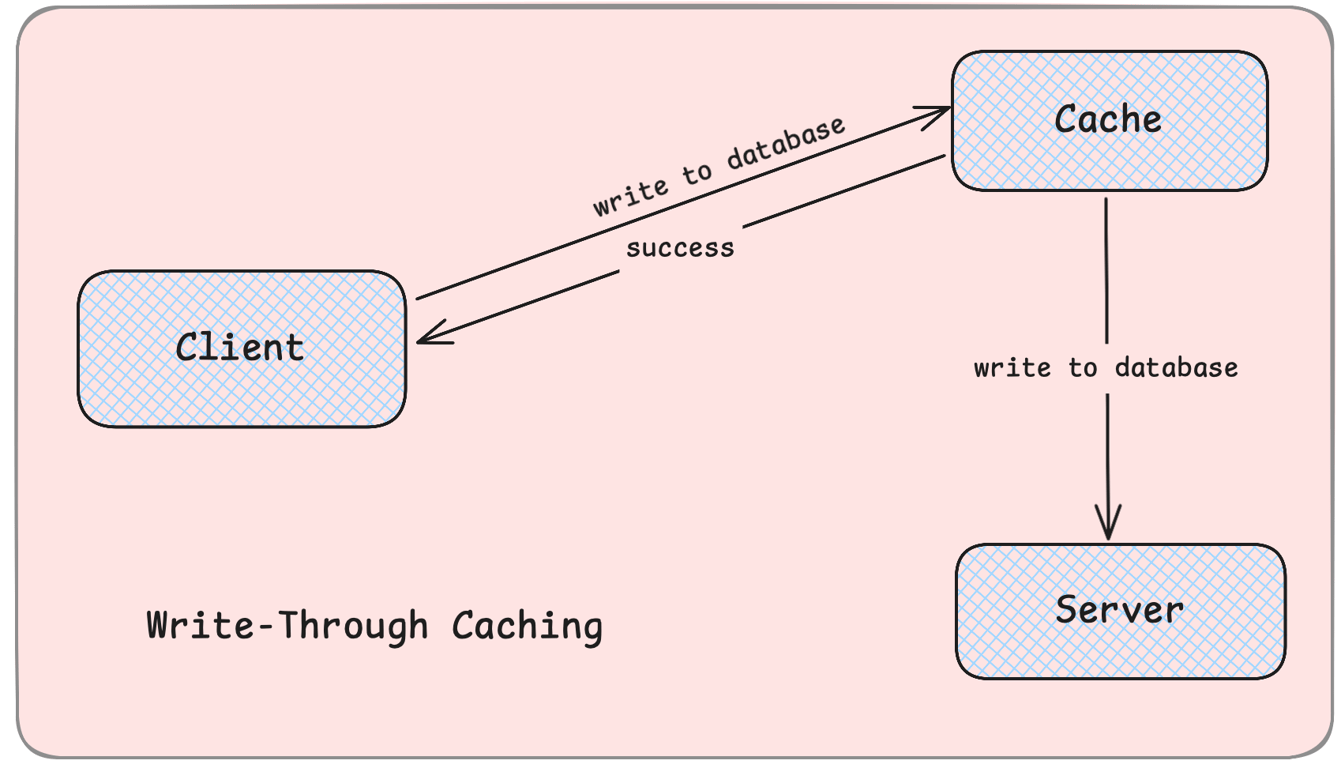

1. Write-Through Caching – Immediate Sync

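Data is written to the cache and the database in the same operation, and the write completes only after both have been updated.

This keeps the cache consistent with the database, but each write is slower because it must wait for the database.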

Example: A banking app updates account balances in both cache and database.

✔ Pros: Data is always fresh.

✖ Cons: Slower write operations.
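A minimal write-through sketch in the spirit of the banking example. A plain dict is a hypothetical stand-in for the real database; the key point is that `write` does not return until both stores hold the new value.

```python
class WriteThroughCache:
    """Every write goes to the database and the cache before returning."""

    def __init__(self, database: dict):
        self.database = database  # hypothetical stand-in for the real database
        self.cache: dict = {}

    def write(self, key, value):
        # Database first: if this raises, the cache is left untouched.
        self.database[key] = value  # synchronous; the caller waits for this
        self.cache[key] = value     # cache now matches the database

    def read(self, key):
        if key not in self.cache:
            self.cache[key] = self.database[key]
        return self.cache[key]

db = {}
accounts = WriteThroughCache(db)
accounts.write("acct:1001:balance", 2500)
print(db["acct:1001:balance"], accounts.read("acct:1001:balance"))  # 2500 2500
```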

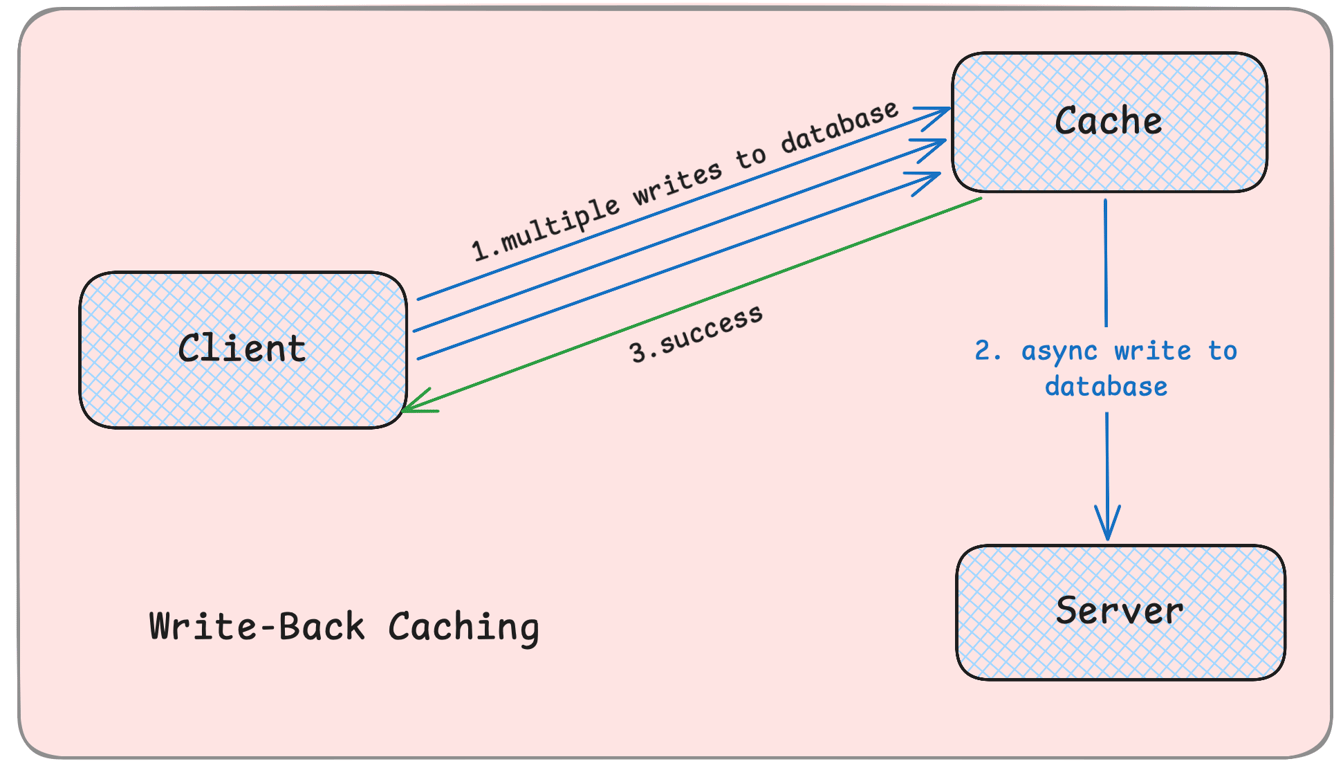

2. Write-Back Caching – Faster Writes

Data is first written to the cache and then copied to the database asynchronously, for example periodically or in batches.

This makes writes faster, but any changes not yet copied to the database are lost if the cache fails.

Example: Online gaming leaderboards store scores in the cache before updating the database.

✔ Pros: Faster write performance.

✖ Cons: Risk of data loss if the cache crashes.
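A minimal write-back sketch for the leaderboard example. Writes only touch the cache and mark the key as "dirty"; a separate `flush` step (a hypothetical name, which a real system would run periodically or in a background worker) copies dirty keys to the database.

```python
class WriteBackCache:
    """Writes land in the cache; dirty keys are flushed to the database later."""

    def __init__(self, database: dict):
        self.database = database  # hypothetical stand-in for the real database
        self.cache: dict = {}
        self.dirty: set = set()   # keys changed in the cache but not yet persisted

    def write(self, key, value):
        self.cache[key] = value   # fast: no database round-trip here
        self.dirty.add(key)

    def flush(self):
        # Persist every dirty key, then mark everything clean.
        for key in self.dirty:
            self.database[key] = self.cache[key]
        self.dirty.clear()

db = {}
leaderboard = WriteBackCache(db)
leaderboard.write("player:7", 1200)
leaderboard.write("player:7", 1350)  # overwrites in the cache only
# Nothing has been persisted yet; a crash at this point would lose both scores.
print(db)  # {}
leaderboard.flush()
print(db)  # {'player:7': 1350}
```

Note that the two score updates reached the database as a single write, which is where much of the speed-up comes from.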

Real-World Use Cases

1. E-Commerce Websites

Caching product details reduces database calls, so product pages load much faster.

2. Social Media Feeds

Feeds are cached so users don’t have to wait for their feed to be rebuilt from scratch on every visit.

3. Streaming Services

Popular videos are cached close to users to reduce buffering.

Conclusion

Caching speeds up applications, reduces database load, and enhances scalability.

Key techniques include cache invalidation, which keeps cached data accurate, and write strategies such as write-through and write-back, which trade consistency against write speed.

Next, we’ll explore Distributed Caching with Redis, Memcached, and CDN-based caching.